react/redux to elm feature comparison: playing morse sounds

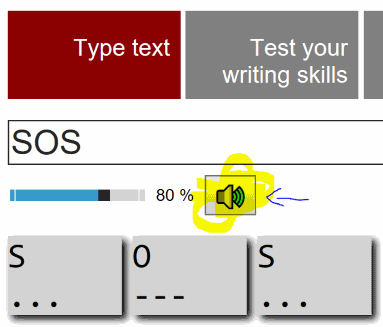

This post is about how the feature of listening to morse sounds when pressing the button shown above is implemented, first in react/redux and then in elm.

react/redux

Let’s start with the user interaction in the UI:

```jsx
<input type="button" className="soundButton" value="🔊" onClick={playSound} />
```

playSound is an action creator that is made available to the component through react-redux's connect functionality. The action looks like this:

```javascript
export function playSound() {
  // redux-thunk calls the returned function with dispatch and getState
  return async (dispatch, getState) => {
    const { userInput, soundSpeed } = getState().typing;
    await textAsMorseSound(userInput, soundSpeed);
  };
}
```

This type of function is supported by the redux-thunk middleware, which passes in the dispatch and getState functions so that side effects can be performed in response to a user interaction in the UI. Digging deeper:

```javascript
export async function textAsMorseSound(input, soundSpeed) {
  for (const c of Array.from(input)) {
    const code = charToMorseCode(c);
    await asyncPlayMorse(Array.from(code), soundSpeed);
    await delay(DASH_LENGTH / soundSpeed); // Pause between chars
  }
}

// ...

export function delay(millisecs, value = null) {
  return new Promise((resolve) => {
    setTimeout(() => resolve(value), millisecs);
  });
}
```

async/await, part of ES2017 but already available through Babel transpilation, allows you to work with promises much like you work with Tasks in the post-.NET 4.5 world. This makes the code that performs the necessary time delays much easier to read.
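textAsMorseSound relies on charToMorseCode, which the post doesn't show. A minimal sketch of what such a helper could look like, assuming a plain lookup table of International Morse code (letters and digits only). Two further assumptions are purely hypothetical: a space is passed through as " " so that the " " case in asyncPlayMorse produces a pause, and unknown characters map to an empty string, so they play nothing.

```javascript
// Hypothetical helper: the post calls charToMorseCode but does not show it.
// Assumes a plain lookup table of International Morse code.
const MORSE_TABLE = {
  A: ".-", B: "-...", C: "-.-.", D: "-..", E: ".", F: "..-.",
  G: "--.", H: "....", I: "..", J: ".---", K: "-.-", L: ".-..",
  M: "--", N: "-.", O: "---", P: ".--.", Q: "--.-", R: ".-.",
  S: "...", T: "-", U: "..-", V: "...-", W: ".--", X: "-..-",
  Y: "-.--", Z: "--..",
  0: "-----", 1: ".----", 2: "..---", 3: "...--", 4: "....-",
  5: ".....", 6: "-....", 7: "--...", 8: "---..", 9: "----.",
};

function charToMorseCode(c) {
  // Assumption: a space stays " " so asyncPlayMorse inserts a PAUSE.
  if (c === " ") return " ";
  // Assumption: unknown characters map to "" and therefore play nothing.
  return MORSE_TABLE[c.toUpperCase()] || "";
}
```

Array.from(charToMorseCode("s")) then yields [".", ".", "."], which is the shape asyncPlayMorse expects.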

```javascript
// characters: Array of strings like "-", "." and " "
export async function asyncPlayMorse(characters, soundSpeed = 1) {
  for (const c of characters) {
    switch (c) {
      case ".":
        startSound();
        await delay(DOT_LENGTH / soundSpeed);
        stopSound();
        await delay(DOT_LENGTH / soundSpeed);
        break;
      case "-":
        startSound();
        await delay(DASH_LENGTH / soundSpeed);
        stopSound();
        await delay(DOT_LENGTH / soundSpeed);
        break;
      case " ":
        await delay(PAUSE / soundSpeed);
        break;
      default:
        console.log(c); // unexpected symbol: log it and move on
        break;
    }
  }
}
```

Finally, startSound and stopSound connect the oscillator to, and disconnect it from, the browser's audio output.
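Stepping back to the redux plumbing: playSound only works because redux-thunk intercepts actions that are functions and calls them with dispatch and getState instead of handing them to the reducers. A minimal hand-rolled sketch of that mechanism shows why no reducer code is needed for playing sound. createTinyStore, makePlaySound and the state shape are hypothetical names for illustration; this is not the actual redux or redux-thunk source.

```javascript
// Hypothetical mini store with thunk support, for illustration only.
// It mimics what redux plus redux-thunk do; it is not their source code.
function createTinyStore(reducer, initialState) {
  let state = initialState;
  const getState = () => state;

  function dispatch(action) {
    // Thunk behaviour: a function is called instead of being reduced.
    if (typeof action === "function") {
      return action(dispatch, getState);
    }
    // Plain object actions go through the reducer as usual.
    state = reducer(state, action);
    return action;
  }

  return { dispatch, getState };
}

// A thunk shaped like playSound, with the side effect injected so it can be observed.
function makePlaySound(playFn) {
  return () => (dispatch, getState) => {
    const { userInput, soundSpeed } = getState().typing;
    playFn(userInput, soundSpeed);
  };
}

// Usage: dispatching the thunk triggers the side effect without calling the reducer.
let reducerCalls = 0;
const reducer = (state) => {
  reducerCalls += 1;
  return state;
};
const store = createTinyStore(reducer, { typing: { userInput: "sos", soundSpeed: 2 } });
const played = [];
const playSound = makePlaySound((input, speed) => played.push([input, speed]));
store.dispatch(playSound());
```

The reducer stays untouched, which matches the post's observation that nothing in the application state changes when sound is played.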

elm

As you can imagine, not every single browser API is exposed to elm. Hence the concept of ports, a subsystem that lets you interoperate with plain javascript and, through it, with the full browser API. So we go ahead and define ports to start and stop the sound:

```elm
port audioOn : () -> Cmd msg
port audioOff : () -> Cmd msg
```

In javascript, we need to bring these ports to life:

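```javascript
// oscillator and audioCtx: Web Audio objects created elsewhere in the module (not shown)
export function initAudioPort(elmApp) {
  let isConnected = false;

  // audioOn: route the oscillator to the speakers, ignoring repeated messages
  elmApp.ports.audioOn.subscribe(function () {
    if (isConnected) return;
    oscillator.connect(audioCtx.destination);
    isConnected = true;
  });

  // audioOff: detach the oscillator again, ignoring repeated messages
  elmApp.ports.audioOff.subscribe(function () {
    if (!isConnected) return;
    oscillator.disconnect(audioCtx.destination);
    isConnected = false;
  });
}
```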

The function is used like this:

```javascript
const app = Main.embed(document.getElementById('root'));
initAudioPort(app);
```

Now that this is in place, we can stay in elm to implement the functionality. Let's start in the UI again:

```elm
input [ type_ "button", class "soundButton", onClick OnListenToMorse, value "Play Morse" ] []
```

OnListenToMorse is a message defined in the application, and it needs to be handled in the application's update function.

```elm
update msg model =
    case msg of
        -- stuff
        OnListenToMorse ->
            ( model, Audio.playWords model.userInput model.morseSpeed )
```

Just like in the redux app, where I didn't show any reducer code because nothing changes in the application's model, the only reaction here is to initiate a side effect: the model is returned unchanged, together with a command.
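The difference to the redux thunk is that update never performs the side effect itself: it returns the unchanged model plus a command, and the elm runtime executes that command. A rough JavaScript sketch of that division of labour, assuming commands are plain data objects; createRuntime and the command shape are hypothetical, and the real elm runtime is considerably more involved.

```javascript
// Hypothetical, simplified model of the elm architecture's update loop.
// update is pure: it returns [newModel, commands] and performs no side effects.
function update(msg, model) {
  switch (msg.type) {
    case "OnListenToMorse":
      // Model unchanged; the effect is only described as data (like a Cmd Msg).
      return [model, [{ kind: "playWords", words: model.userInput, speed: model.morseSpeed }]];
    default:
      return [model, []];
  }
}

// The runtime owns all side effects: it applies update, then runs each command.
function createRuntime(initialModel, runCommand) {
  let model = initialModel;
  return {
    send(msg) {
      const [nextModel, commands] = update(msg, model);
      model = nextModel;
      commands.forEach(runCommand);
    },
    getModel: () => model,
  };
}
```

Because update is pure, it can be checked by inspecting the returned commands, without any audio hardware involved.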

What happens in playWords?

```elm
playWords : String -> Float -> Cmd Msg
playWords words factor =
    stringToMorseSymbols words
        |> List.map (convertSymbolToCommands factor)
        |> bringTogether


-- type of stringToMorseSymbols: String -> List MorseSymbol


convertSymbolToCommands : Float -> MorseSymbol -> List ( Milliseconds, Msg )
convertSymbolToCommands factor symbol =
    let
        adapt ( millisecs, msg ) =
            ( millisecs / factor, msg )

        adaptAll =
            List.map adapt
    in
    adaptAll <|
        case symbol of
            Dot ->
                playDot

            Dash ->
                playDash

            ShortPause ->
                playBetweenChars

            LongPause ->
                playBetweenWords

            Garbled ->
                playBetweenWords


-- example of the "play" functions:


playDot : List ( Milliseconds, Msg )
playDot =
    [ ( 0, SoundMsg StartSound )
    , ( dotLength, SoundMsg StopSound )
    , ( pauseBetweenChars, NoOp )
    ]


-- from tuples to commands


bringTogether : List (List ( Milliseconds, Msg )) -> Cmd Msg
bringTogether =
    List.concat >> List.map toSequenceTuple >> sequence
```

In other words, first the user input is converted to a list of morse symbols.

This is then turned into a list of lists of tuples, each stating after which relative time in milliseconds which message should be piped into the update function (see e.g. Dot -> playDot, with the speed factor applied afterwards).
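To make the data flow concrete, here is a JavaScript sketch of the same pipeline up to, but not including, sequence: each symbol is mapped to a list of [milliseconds, message] pairs, every delay is divided by the speed factor, and the lists are flattened. The timing constants are made-up values, messages are plain strings, and the contents of playDash, playBetweenChars and playBetweenWords are assumptions by analogy; only the shape of playDot comes from the post.

```javascript
// Made-up timing constants in milliseconds (the post does not list its values).
const dotLength = 100;
const dashLength = 300;
const pauseBetweenChars = 100;
const pauseBetweenWords = 700;

// Each "play" list holds [relative delay in ms, message] pairs.
const playDot = [[0, "StartSound"], [dotLength, "StopSound"], [pauseBetweenChars, "NoOp"]];
// Assumed by analogy with playDot:
const playDash = [[0, "StartSound"], [dashLength, "StopSound"], [pauseBetweenChars, "NoOp"]];
const playBetweenChars = [[pauseBetweenChars, "NoOp"]];
const playBetweenWords = [[pauseBetweenWords, "NoOp"]];

const PLAYS = {
  Dot: playDot,
  Dash: playDash,
  ShortPause: playBetweenChars,
  LongPause: playBetweenWords,
  Garbled: playBetweenWords,
};

// Mirrors convertSymbolToCommands: pick the list, divide every delay by factor.
function convertSymbolToCommands(factor, symbol) {
  return PLAYS[symbol].map(([ms, msg]) => [ms / factor, msg]);
}

// Mirrors List.map (convertSymbolToCommands factor) followed by List.concat.
function symbolsToTimedMessages(symbols, factor) {
  return symbols.flatMap((symbol) => convertSymbolToCommands(factor, symbol));
}
```

With a factor of 2, every delay is halved, so playback runs at double speed.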

The final ingredient in this magic is the sequence function from the elm package delay. Its code is actually not very complex: it does the necessary plumbing to call out to Process.sleep, the elm equivalent of javascript's setTimeout.
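The idea behind sequence can be sketched in JavaScript as well. Each delay is relative to the previous message, like the (Milliseconds, Msg) tuples in playDot, so the delays are first accumulated into absolute offsets and each message is then scheduled with setTimeout. This is a hypothetical illustration of the concept, not the delay package's actual implementation; toAbsoluteSchedule, the dispatch callback and the returned cancel function are assumptions made for the sketch.

```javascript
// Hypothetical: turn relative delays into absolute offsets from "now".
function toAbsoluteSchedule(steps) {
  let elapsed = 0;
  return steps.map(([delayMs, msg]) => {
    elapsed += delayMs;
    return [elapsed, msg];
  });
}

// Schedules each message with setTimeout and hands it to dispatch when due.
// Returns a cancel function that clears all pending timers.
function sequence(steps, dispatch) {
  const timers = toAbsoluteSchedule(steps).map(([atMs, msg]) =>
    setTimeout(() => dispatch(msg), atMs)
  );
  return () => timers.forEach(clearTimeout);
}
```

The cancel function goes beyond what the post describes; it would, for example, let a second button press stop a running playback.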

What happens then, when the SoundMsg StartSound and StopSound messages are received?

```elm
update msg model =
    case msg of
        -- stuff
        SoundMsg soundMsg ->
            Audio.update soundMsg model
```

In the audio module's update:

```elm
update : SoundMsg -> Model -> ( Model, Cmd Msg )
update msg model =
    case msg of
        StartSound ->
            ( model, audioOn () )

        StopSound ->
            ( model, audioOff () )
```

And here is where you finally find the calls to the interop ports we defined to connect and disconnect the oscillator :)
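Wrapping SoundMsg inside Msg is the usual elm way of delegating part of the update logic to another module. A rough JavaScript analogue, assuming messages are tagged objects and commands are plain data. createPorts and its emit method are hypothetical stand-ins for elmApp.ports (real elm outgoing ports only expose subscribe to javascript), and step is a made-up runtime step, not the real elm runtime.

```javascript
// Hypothetical stand-in for elm's outgoing ports: named lists of subscribers.
function createPorts(names) {
  const ports = {};
  for (const name of names) {
    const subscribers = [];
    ports[name] = {
      subscribe: (fn) => subscribers.push(fn),
      emit: () => subscribers.forEach((fn) => fn()), // made up for this sketch
    };
  }
  return ports;
}

// Mirrors Audio.update: each sound message maps to one port command (as data).
function audioUpdate(soundMsg, model) {
  switch (soundMsg) {
    case "StartSound":
      return [model, { port: "audioOn" }];
    case "StopSound":
      return [model, { port: "audioOff" }];
    default:
      return [model, null];
  }
}

// Mirrors the main update: SoundMsg payloads are delegated to audioUpdate.
function mainUpdate(msg, model) {
  if (msg.type === "SoundMsg") return audioUpdate(msg.payload, model);
  return [model, null];
}

// Minimal runtime step: run update, then push port commands out to javascript.
function step(msg, model, ports) {
  const [nextModel, cmd] = mainUpdate(msg, model);
  if (cmd && cmd.port) ports[cmd.port].emit();
  return nextModel;
}
```

In the real app, the subscribers on the javascript side are the ones registered in initAudioPort.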

Which route do you prefer?